FlowChat is a VSCode extension that brings the power of conversational AI to your coding environment.

With FlowChat, you can chat with large language models (LLMs), manage chat histories as files, and create custom LLM integrations, all within the familiar interface of VSCode.

Update Notice:

Please note that the FlowChat VSCode extension has been renamed to “ICE” (Integrated Conversational Environment) to avoid potential trademark conflicts. We have also introduced several exciting new features and improvements.

For more information about the name change, updates, and new features, please refer to my latest blog post: ICE (Integrated Conversational Environment) - Exciting Updates and New Features.

The content here refers to the extension by its previous name, FlowChat. Please keep in mind that all references to FlowChat now apply to ICE.

The Journey

FlowChat’s journey began with a Swift-based application that offered similar functionality but was limited to the Apple platform. The initial version utilized WKWebView for message tree rendering, Core Data for data persistence, and JavaScriptCore for LLM providers. One of the unique features explored during this phase was a horizontally scrollable message tree UI for branched messages, allowing users to scroll left and right to instantly and naturally switch between conversation paths.

However, after months of using the Swift-based application, it became apparent that the approach had limitations in efficiency and user experience. I also wanted to make the tool accessible to a broader audience by supporting multiple platforms, so I decided to migrate the project to VSCode.

Before starting the development of the VSCode extension, the idea of using a CLI utility called “LLM” for the chat provider part was considered. “LLM” is a Python-based tool that supports a wide range of API integration plugins. However, “LLM” is designed to manage chat sessions independently and can only receive one user message at a time through CLI commands. To address this limitation, I submitted a Pull Request to the project where I implemented a feature that allowed passing chat history JSON via CLI arguments (https://github.com/simonw/llm/pull/419). I believe this would allow better interoperability for “LLM” with other tools. Unfortunately, its maintainers did not express interest in this feature.

Now, with the migration to VSCode, I have the opportunity to build a more flexible and extensible chat tool that can be easily integrated with various LLM providers. This blog post highlights the key features of FlowChat which I am excited to share with you.

Chat History Management

At the core of FlowChat is its chat history management system. FlowChat stores chat histories as YAML files, providing a simple and effective way to persist and share your conversations. This approach enables seamless interoperability with other tools and allows you to version control your chat histories using your preferred version control system. The ChatHistoryManager efficiently handles the asynchronous writing of chat actions, ensuring data integrity and preventing any loss of information.
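One common way to keep asynchronous writes in order is to chain them on a single promise, so that each chat action is written only after the previous one has settled. The sketch below illustrates that idea only; the `ActionWriteQueue` class, the injected `writeFn`, and the action fields are hypothetical names for illustration and are not taken from FlowChat's source.

```javascript
// Hypothetical sketch: serialize asynchronous writes so chat actions land in order.
// `writeFn` is an injected async function (for example, one that appends to a
// .chat file). It is an assumption for illustration, not FlowChat's real API.
class ActionWriteQueue {
  constructor(writeFn) {
    this.writeFn = writeFn;
    this.tail = Promise.resolve(); // settles once all queued writes were attempted
  }

  // Queue an action; the returned promise settles when this action is written.
  enqueue(action) {
    const result = this.tail.then(() => this.writeFn(action));
    // Keep the chain alive even if one write fails, so later actions still run.
    this.tail = result.catch(() => {});
    return result;
  }

  // Wait until everything queued so far has been attempted.
  flush() {
    return this.tail;
  }
}

// Usage: writes with different latencies still complete in submission order.
const written = [];
const slowWrite = (action) =>
  new Promise((resolve) =>
    setTimeout(() => {
      written.push(action.type);
      resolve();
    }, action.delayMs)
  );

const queue = new ActionWriteQueue(slowWrite);
queue.enqueue({ type: "add", delayMs: 30 });
queue.enqueue({ type: "edit", delayMs: 5 });
queue.enqueue({ type: "delete", delayMs: 1 });
queue.flush().then(() => console.log(written.join(","))); // prints "add,edit,delete"
```

Because each write starts only after the previous one finishes, a slow first write cannot be overtaken by a faster later one, which is the property that matters when actions are replayed from a file.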

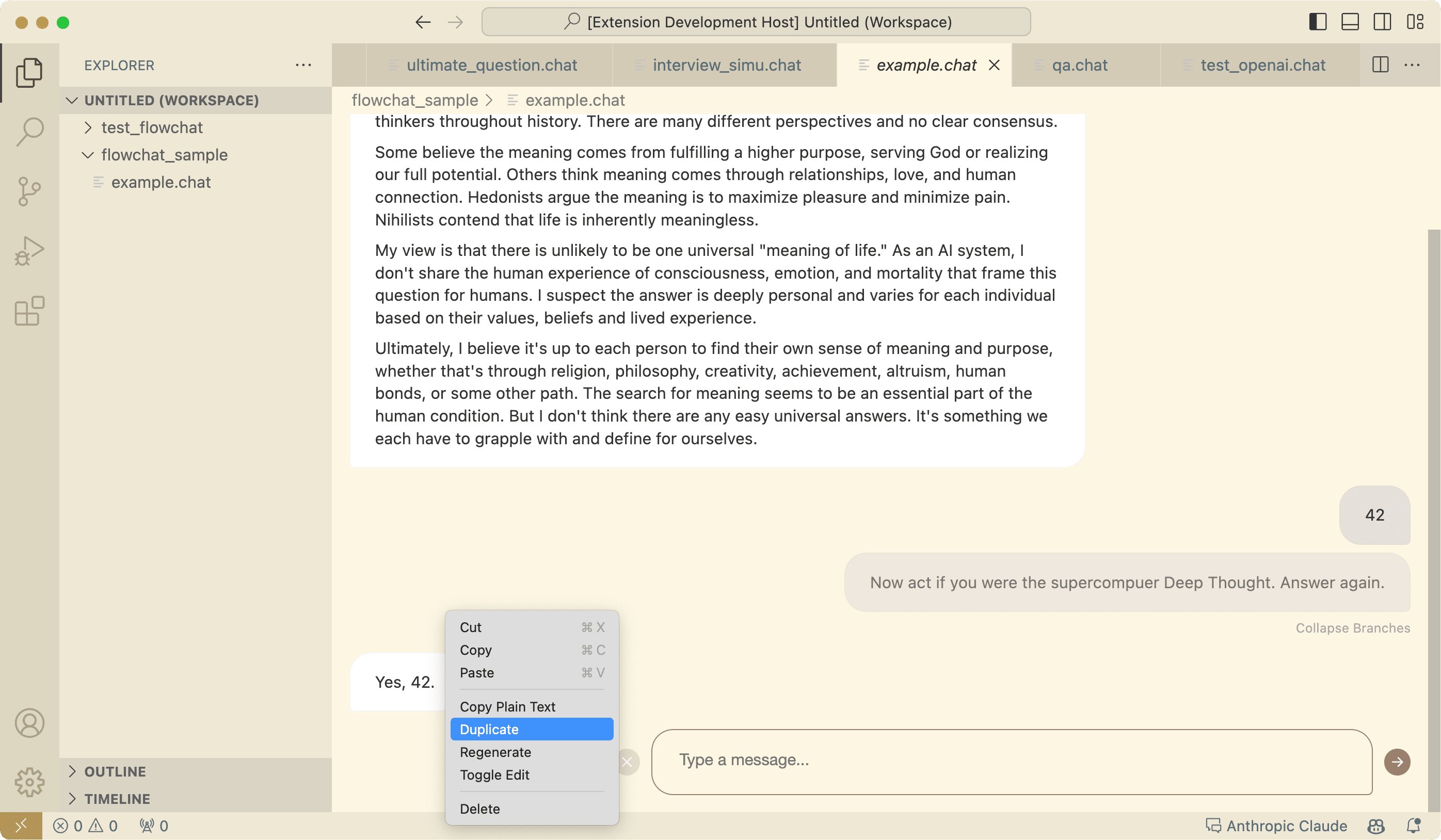

One of the standout features of FlowChat is the ability to edit any message, both from users and LLMs. This flexibility allows you to refine your prompts and experiment with different conversation paths. Additionally, FlowChat supports fast duplication and branching of conversations, enabling you to explore various scenarios and maintain high-quality LLM responses by minimizing bias introduced during follow-up questions. These features make FlowChat a powerful tool for prompt engineering and conversational AI experimentation.
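Branching can be pictured as a tree in which each message points to its parent, so an alternative follow-up becomes a sibling rather than an overwrite. The following is a minimal sketch of that data structure; the `MessageTree` class and the field names (`id`, `parentId`, `role`, `content`) are assumptions for illustration and do not describe FlowChat's actual file schema.

```javascript
// Hypothetical sketch of branching: every message keeps a pointer to its parent,
// so an alternative follow-up becomes a sibling instead of replacing history.
// Field names (id, parentId, role, content) are assumptions, not FlowChat's schema.
class MessageTree {
  constructor() {
    this.messages = new Map(); // id -> message
    this.nextId = 1;
  }

  // Append a message under `parentId` (null for the first message).
  add(parentId, role, content) {
    const id = this.nextId++;
    this.messages.set(id, { id, parentId, role, content });
    return id;
  }

  // Branch: create a sibling of an existing message with different content,
  // leaving the original conversation path intact.
  branch(id, newContent) {
    const original = this.messages.get(id);
    if (!original) throw new Error(`Unknown message id: ${id}`);
    return this.add(original.parentId, original.role, newContent);
  }

  // Walk from a leaf back to the root to recover one conversation path.
  pathTo(leafId) {
    const path = [];
    for (let id = leafId; id !== null; id = this.messages.get(id).parentId) {
      path.unshift(this.messages.get(id).content);
    }
    return path;
  }
}

const tree = new MessageTree();
const q1 = tree.add(null, "user", "Explain closures.");
const a1 = tree.add(q1, "assistant", "A closure captures variables.");
const q2 = tree.add(a1, "user", "Show an example.");
const q2b = tree.branch(q2, "Show an example in Python.");

console.log(tree.pathTo(q2));  // original path
console.log(tree.pathTo(q2b)); // same first two messages, different follow-up
```

Both paths share the first two messages, so the LLM sees an identical context up to the point where the follow-up question differs.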

Check out an example of the .chat file at example.chat.

Architecture and Design

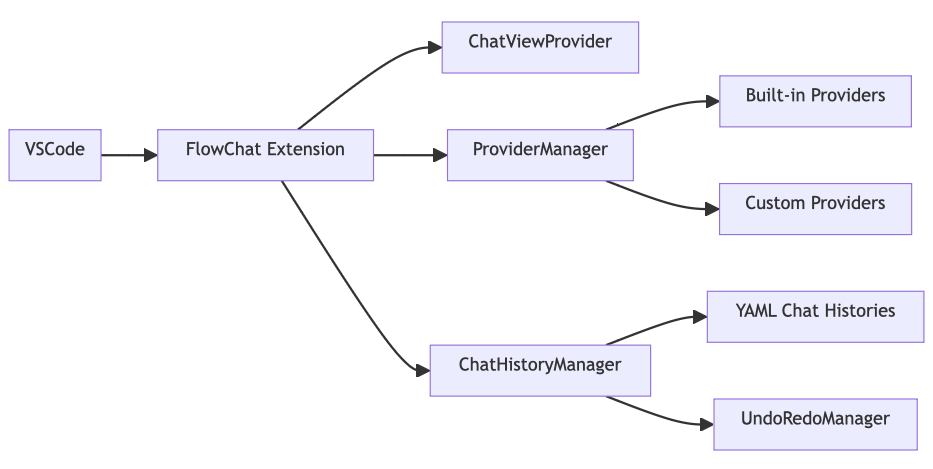

FlowChat’s architecture is designed with flexibility and extensibility in mind. The ChatViewProvider, a custom editor provider, seamlessly integrates the chat experience into VSCode. The ProviderManager takes care of loading, initializing, and communicating with various LLM providers, while the ChatHistoryManager manages chat history operations, including adding, editing, and deleting messages. FlowChat also includes an UndoRedoManager that provides a robust undo/redo functionality, allowing you to easily navigate through your chat history.
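Undo/redo is often built from two stacks of reversible actions. The sketch below shows that general technique under stated assumptions: the `UndoRedoSketch` class and the `{ apply, revert }` action shape are hypothetical and are not the interface of FlowChat's UndoRedoManager.

```javascript
// Hypothetical sketch of undo/redo with two stacks of reversible actions.
// The action shape ({ apply, revert }) is an assumption for illustration.
class UndoRedoSketch {
  constructor() {
    this.undoStack = [];
    this.redoStack = [];
  }

  // Run an action and remember how to reverse it.
  execute(action) {
    action.apply();
    this.undoStack.push(action);
    this.redoStack.length = 0; // a new action invalidates the redo history
  }

  // Reverse the most recent action; returns false if there is nothing to undo.
  undo() {
    const action = this.undoStack.pop();
    if (!action) return false;
    action.revert();
    this.redoStack.push(action);
    return true;
  }

  // Re-apply the most recently undone action; returns false if there is none.
  redo() {
    const action = this.redoStack.pop();
    if (!action) return false;
    action.apply();
    this.undoStack.push(action);
    return true;
  }
}

// Usage: adding a message is modeled as a reversible action.
const chatLog = [];
const addMessage = (text) => ({
  apply: () => chatLog.push(text),
  revert: () => chatLog.pop(),
});

const manager = new UndoRedoSketch();
manager.execute(addMessage("Hello"));
manager.execute(addMessage("How are you?"));
manager.undo(); // chatLog is ["Hello"]
manager.redo(); // chatLog is ["Hello", "How are you?"]
manager.undo();
manager.execute(addMessage("Goodbye")); // clears the redo stack
const redoAvailable = manager.redo();   // false: nothing left to redo
console.log(chatLog, redoAvailable);
```

Clearing the redo stack on every new action is a deliberate simplification; a tool with branching could instead keep the undone actions as a separate branch.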

Customizability and Extensibility

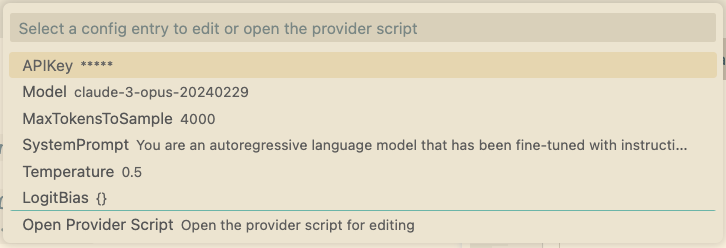

FlowChat allows you to create custom LLM providers using JavaScript. The ProviderManager automatically discovers and loads these custom providers, making it easy to extend FlowChat’s capabilities. The configuration system allows you to define secure variables, required variables, and optional variables for each provider, giving you fine-grained control over the behavior of custom providers.
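As a rough illustration of how the three variable categories might be checked, here is a small sketch. The property names (`secureVariables`, `requiredVariables`, `optionalVariables`), the helper functions, and the choice to treat secure variables as mandatory are all assumptions made for this example; the real provider configuration format may differ.

```javascript
// Hypothetical sketch: a provider declares which variables it needs, and a
// helper checks user-supplied values against that declaration.
// All property and function names here are assumptions, not FlowChat's schema.
const providerConfig = {
  secureVariables: ["API_KEY"],              // secrets such as API keys
  requiredVariables: ["MODEL"],              // must be supplied by the user
  optionalVariables: { TEMPERATURE: "0.7" }, // name -> default value
};

// Merge defaults with supplied values, failing early if anything is missing.
// This sketch assumes secure variables are also mandatory.
function resolveVariables(config, supplied) {
  const mustHave = [...config.secureVariables, ...config.requiredVariables];
  const missing = mustHave.filter((name) => !(name in supplied));
  if (missing.length > 0) {
    throw new Error(`Missing provider variables: ${missing.join(", ")}`);
  }
  // Defaults first, then user-supplied values override them.
  return { ...config.optionalVariables, ...supplied };
}

// Mask secure values so they can be shown in logs or error messages.
function redactSecrets(config, values) {
  const safe = { ...values };
  for (const name of config.secureVariables) {
    if (name in safe) safe[name] = "***";
  }
  return safe;
}

const values = resolveVariables(providerConfig, {
  API_KEY: "sk-example",
  MODEL: "example-model",
});
console.log(redactSecrets(providerConfig, values));
```

Separating secure variables from ordinary ones lets a tool store secrets differently and keep them out of logs, which is the main reason to declare the categories up front.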

The ability to create custom providers, combined with the message editing and branching features, makes FlowChat a highly customizable and extensible tool for conversational AI. You can tailor the behavior of LLMs to your specific needs and experiment with different approaches to prompt engineering and conversation management.

Provider Communication

FlowChat’s ProviderManager communicates with provider scripts using child processes and message passing. It supports various message types, such as getCompletion, stream, done, and error, enabling real-time streaming of LLM responses. This architecture allows FlowChat to handle long-running tasks and provides the ability to cancel ongoing requests, giving you control over the conversation flow.
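The extension side of such a protocol can be sketched as a handler that accumulates streamed chunks until a terminal message arrives. The message types `stream`, `done`, and `error` come from the description above; the payload fields (`requestId`, `text`, `message`) and the `createCompletionCollector` helper are assumptions for illustration. In a real extension the handler would be attached to a Node.js child process with `child.on("message", ...)`; here the messages are fed in by hand so the example runs on its own.

```javascript
// Hypothetical sketch of an extension-side handler for provider messages.
// Message types (stream, done, error) follow the post; the payload fields
// (requestId, text, message) are assumptions for illustration.
function createCompletionCollector(requestId, callbacks) {
  let text = "";
  let finished = false;

  // In a real extension this would be registered via child.on("message", ...).
  return function onProviderMessage(msg) {
    // Ignore messages for other requests or ones arriving after completion.
    if (finished || msg.requestId !== requestId) return;
    switch (msg.type) {
      case "stream":
        text += msg.text;
        callbacks.onChunk(msg.text);
        break;
      case "done":
        finished = true;
        callbacks.onDone(text);
        break;
      case "error":
        finished = true;
        callbacks.onError(new Error(msg.message));
        break;
      default:
        break; // unknown message types are ignored in this sketch
    }
  };
}

// Usage: feed the handler the messages a provider process might send.
const events = [];
const onMessage = createCompletionCollector("req-1", {
  onChunk: (chunk) => events.push(`chunk:${chunk}`),
  onDone: (full) => events.push(`done:${full}`),
  onError: (err) => events.push(`error:${err.message}`),
});

onMessage({ type: "stream", requestId: "req-1", text: "Hel" });
onMessage({ type: "stream", requestId: "req-2", text: "???" }); // other request, ignored
onMessage({ type: "stream", requestId: "req-1", text: "lo" });
onMessage({ type: "done", requestId: "req-1" });
onMessage({ type: "stream", requestId: "req-1", text: "late" }); // after done, ignored
console.log(events); // [ 'chunk:Hel', 'chunk:lo', 'done:Hello' ]
```

Tagging each message with a request identifier is one way to make cancellation safe: once a request is finished or abandoned, any late chunks for it are simply dropped.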

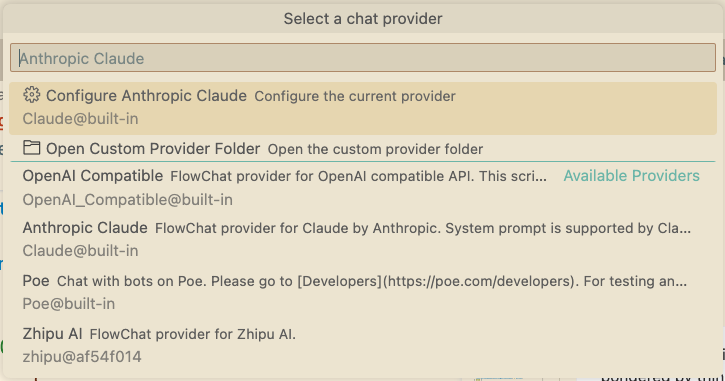

Built-in Providers

FlowChat comes with a set of powerful built-in providers.

You can use these providers out of the box (with API keys):

- OpenAI’s ChatGPT (also compatible with third-party API services that implement the OpenAI API specification)

- Anthropic’s Claude (Claude-3/2.1/2.0/Instant)

- Google’s Gemini

- Quora’s Poe platform, which gives you access to thousands of bots (a developer key is needed, which requires a subscription)

These providers seamlessly integrate with the FlowChat architecture, offering a wide range of configuration options to customize your chat experience.

Future Enhancements and Roadmap

The legacy FlowChat has been an important part of my workflow, and I’m excited to continue its development as a VSCode extension.

The current version of FlowChat is still in its early stages, so instability and performance issues may occur. I’m actively working on improving it.

Some of the planned enhancements include search, tagging, and filtering of chat histories, conversation tree visualization, and performance optimizations. I wholeheartedly welcome feedback and suggestions from you to help shape the future of FlowChat.

Get involved in the development of FlowChat by contributing to the project on GitHub: FlowChat GitHub Repository

Getting Started

To start using FlowChat, simply install it from the VSCode Marketplace.

Once installed, press Ctrl+Shift+P to open the command palette and run New Instant Chat to start chatting with LLMs right away.

You will be prompted to provide API keys for your selected chat provider during the first chat session.

You can also create a .chat file (e.g. idea_discussion.chat) in any trusted workspace and start your flexible yet organized chat session.

Instant Chat sessions are also saved as .chat files, and they are stored in FlowChat’s extension directory.

I hope you enjoy using FlowChat as much as I enjoyed creating it. Happy chatting!